Unlock the Full Potential of Generative AI with Prompt Engineering!

In this post, we delve into the basics of prompts and explore how prompt engineering can greatly enhance the handling of LLMs, complete with vivid illustrations and real-world examples.

Disclaimers

This disclaimer pertains to the code and output presented in this post. The ChatGPT model is large and its output is inherently random, so the exact results shown here may not be reproducible. However, the output shown is authentic, and the explanations provided for each output are logically sound.

Prompt Introduction

Have you ever wondered how to get the most out of ChatGPT or any other large language model? A large part of the answer is Prompt Engineering! As a cutting-edge discipline in the field of generative AI, Prompt Engineering is the key to unlocking the full potential of language models and generative AI tools.

Think of Prompt Engineering as an elevated form of programming that transforms AI models into powerful creative tools. By crafting effective prompts, you can guide generative models toward high-quality outputs and open up new possibilities.

Join me in this post as I introduce Prompt Engineering and explore the art and science of crafting effective prompts. Discover how prompts can be used for a wide range of applications, including creative writing, answering questions, summarizing text, extracting data, and many more. With the right prompts, the possibilities are endless!

Note that prompt engineering is not the whole story: tuning the API parameters is equally important for getting a high-quality response from large language models. You may want to read "Deep dive into OpenAI completion parameters".

How do prompts look?

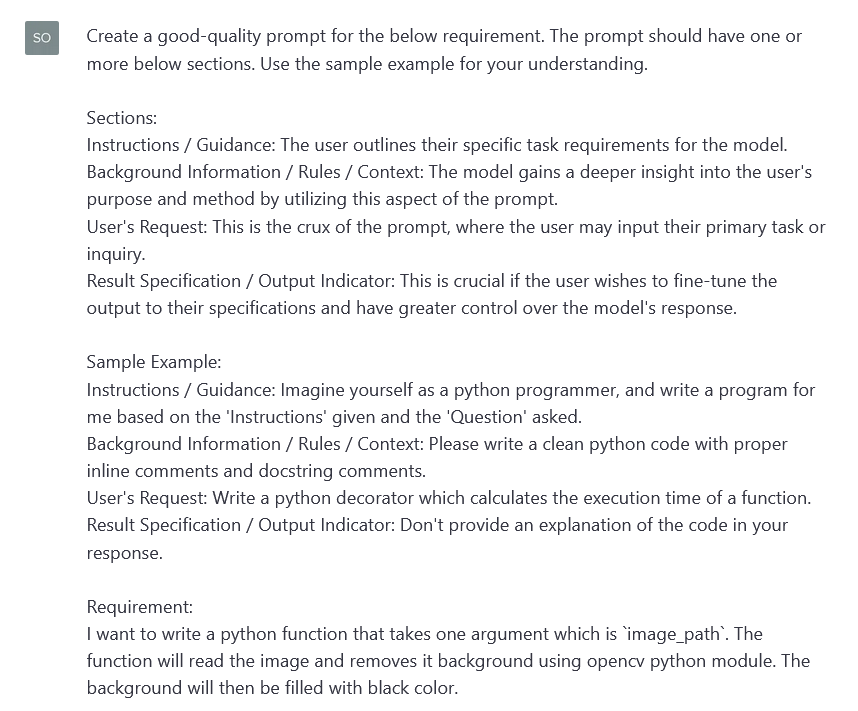

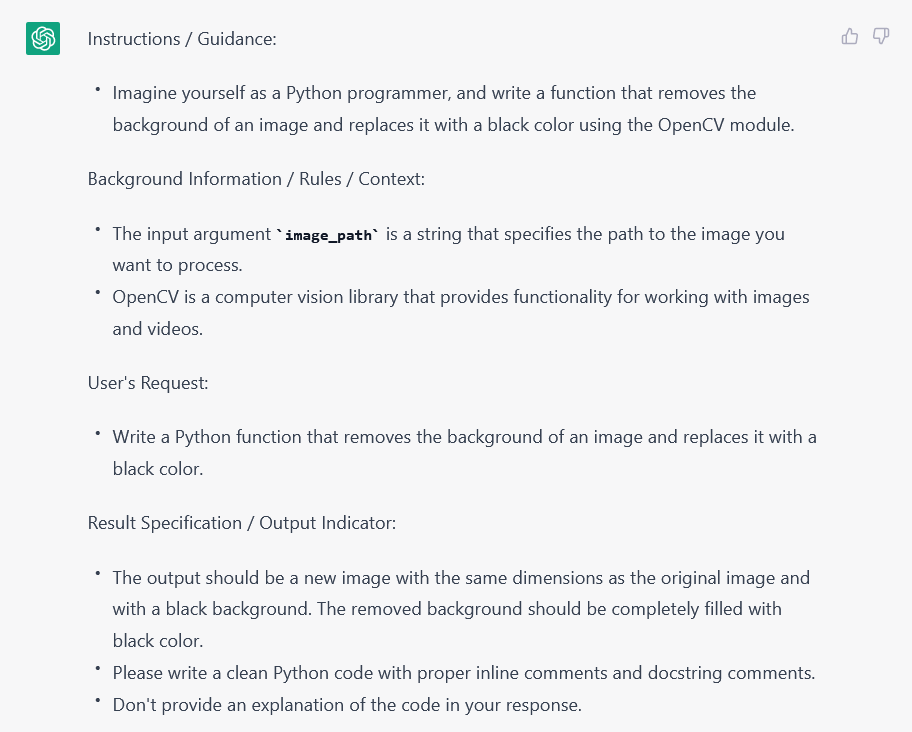

There is no fixed blueprint for a prompt; its shape depends on the goals and outcomes the user wants. Generative AI is a fast-moving field, and researchers are still exploring how prompts work and looking for new and improved methods. In this write-up, I aim to showcase a general framework for an effective prompt and how to harness its power. Keep in mind that this is not a strict rule, but a suggested structure that has produced impressive results across various trials.

A good prompt can include one or more of the following key elements.

Instructions / Guidance: The user outlines the specific task the model should perform.

Background Information / Rules / Context: This part of the prompt gives the model a deeper insight into the user's purpose and the approach it should take.

User's Request: This is the crux of the prompt, where the user states the primary task or question.

Result Specification / Output Indicator: This is crucial if the user wishes to shape the output to their specifications and have greater control over the model's response.

Not every problem needs all four components, but a first-rate prompt typically includes at least one of them, and often several.
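To make the four elements concrete, here is a minimal sketch that assembles a prompt from whichever parts are present. The `PromptParts` class, the `build_prompt` function, the section labels, and the sample task are all illustrative assumptions of mine, not a standard format and not tied to any library.

```python
from dataclasses import dataclass


@dataclass
class PromptParts:
    """The four optional prompt elements; field names are illustrative."""
    instructions: str = ""
    context: str = ""
    request: str = ""
    output_spec: str = ""


def build_prompt(parts: PromptParts) -> str:
    """Join the non-empty elements into one prompt, each under a short label."""
    labelled = [
        ("Instructions", parts.instructions),
        ("Context", parts.context),
        ("Request", parts.request),
        ("Output format", parts.output_spec),
    ]
    # Skip any element the user left empty, since not every task needs all four.
    sections = [f"{label}: {text.strip()}" for label, text in labelled if text.strip()]
    return "\n\n".join(sections)


# Hypothetical example task, chosen only to show the structure.
prompt = build_prompt(
    PromptParts(
        instructions="Write a Python function.",
        context="The function is for a beginner tutorial, so avoid third-party libraries.",
        request="Check whether a given string is a palindrome.",
        output_spec="Return only the code, with a one-line docstring.",
    )
)
print(prompt)
```

Keeping the elements separate like this makes it easy to vary one of them, for example the output format, while holding the rest of the prompt fixed.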

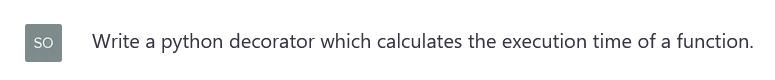

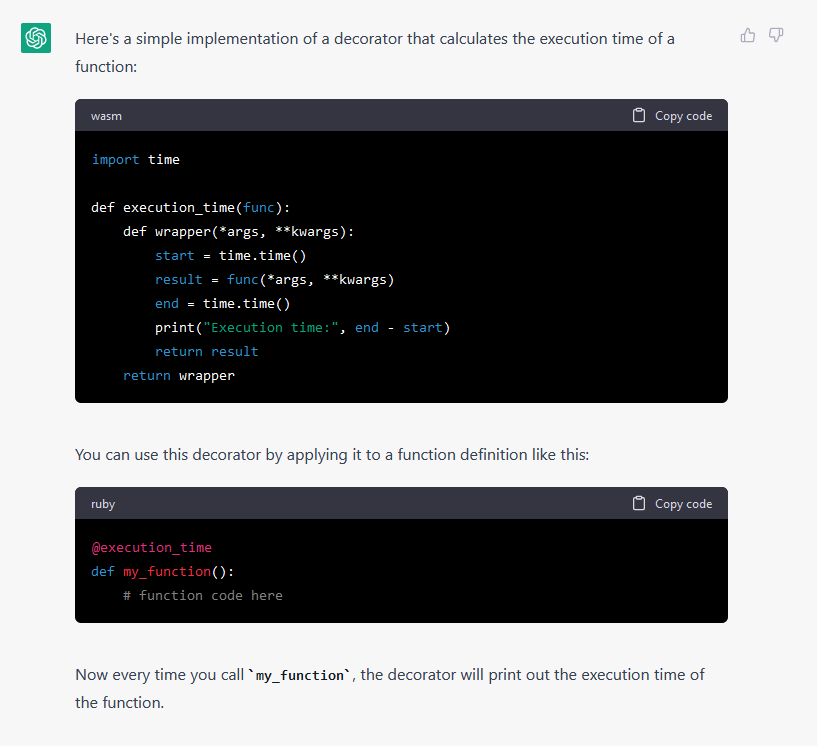

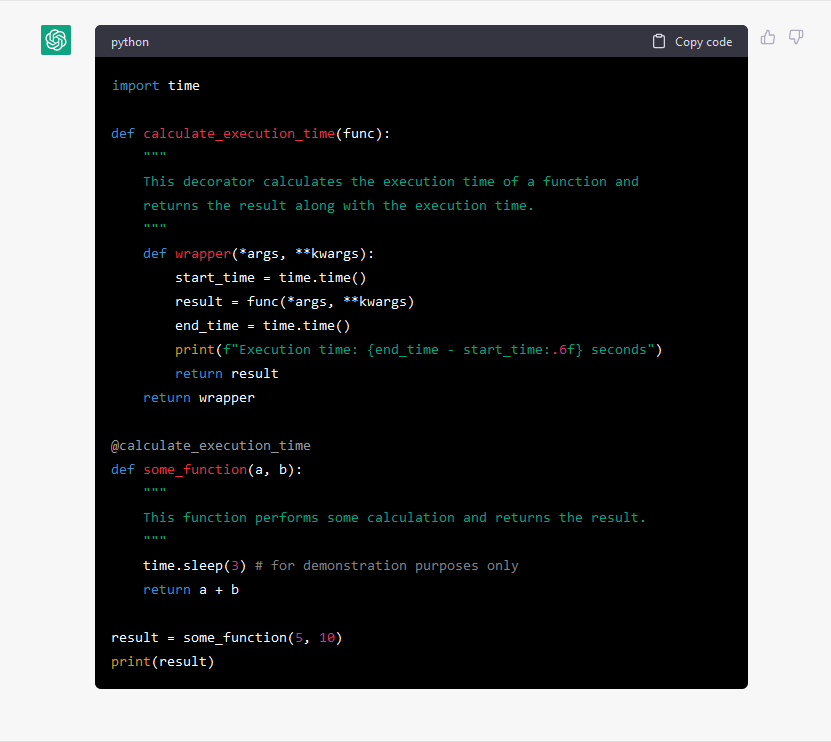

Let us see an example with a normal prompt and a good prompt.

Normal Prompt

Good Quality Prompt

As these two examples show, how carefully a prompt is crafted can significantly influence the response. The code produced by ChatGPT is impeccable in itself, yet the examples highlight how much a good-quality prompt adds.

Some Good Practices

Impersonation

Impersonation is a clever technique for getting a large language model to imitate the unique style of a person or group, either by fine-tuning it on examples written in that style or simply by assigning it a persona in the prompt. It is invaluable when writing prompts, because it lets the model grasp the subtle intricacies of a particular style of writing or speaking and produce text that resembles it in tone, vocabulary, and grammar.

For instance, if you wish to develop a prompt for a language model that can mimic the writing style of a specific author, you could provide the model with an ample corpus of text written by the author and fine-tune it with the impersonation technique. This would educate the model to produce text in a style similar to the author, giving you the ability to craft prompts that are tailored to that specific style.

This technique also has a multitude of applications, such as creating chatbots that can mimic the speech patterns of individuals, or generating marketing content with a tone comparable to a brand. It can be used to create prompts for other language models like dialogue models or summarization models.

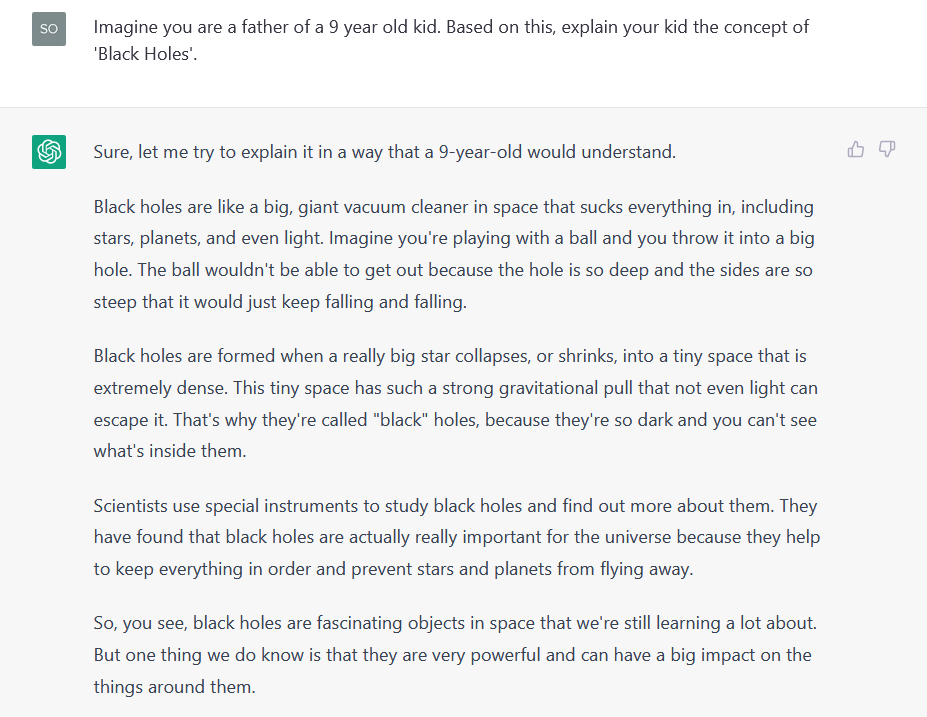

Let’s take an example to visualize the difference.

It's almost magical how ChatGPT effortlessly embodies the persona I assign to it. The results are simple and to the point, and the generated text has a captivating aura that matches the spirit of the impersonated entity. Of course, the accuracy of the response is a whole other matter, but for now, let's appreciate its impeccable style.
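Assigning a persona in the prompt can be sketched as follows. This is only an illustration under my own assumptions: the `persona_messages` helper and the two personas are hypothetical, and the `role`/`content` message layout follows the common chat format rather than anything specific to the examples in this post. No model is called; the code only builds the prompt.

```python
from __future__ import annotations


def persona_messages(persona: str, question: str) -> list[dict[str, str]]:
    """Build a two-message chat prompt that assigns a persona before the question."""
    system = (
        f"You are {persona}. Stay in character and answer in that voice, "
        "matching its tone, vocabulary, and grammar."
    )
    return [
        {"role": "system", "content": system},
        {"role": "user", "content": question},
    ]


# The same question under two hypothetical personas: only the system message changes.
question = "Why is the sky blue?"
for persona in ("a patient physics teacher", "a pirate captain"):
    messages = persona_messages(persona, question)
    print(messages[0]["content"])
    print(messages[1]["content"])
    print()
```

Because the question stays identical, any difference in the responses can be attributed to the persona alone, which makes the effect easy to compare.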

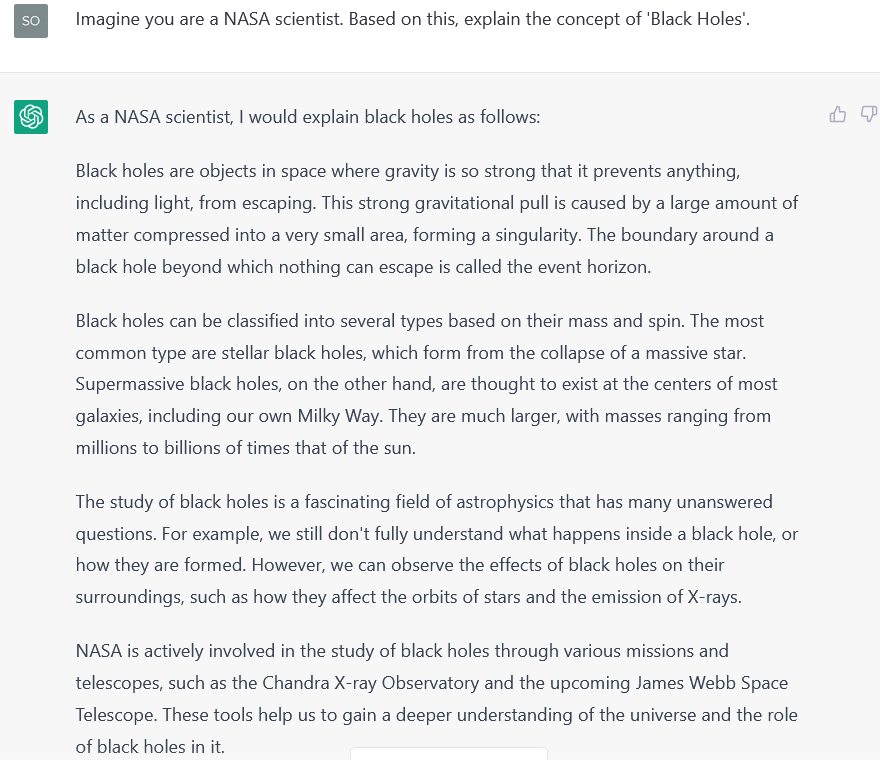

Anyway, another example is on the way.

Fascinating, right? Again, I won’t go into the validity and truthfulness of the response; what is really amazing is how well it captures the style and tone of each persona.

Few-shot Prompting

This technique is revolutionizing the training process of large language models by allowing them to generate text based on just a few examples.

The concept is simple yet effective. By training the model on a small set of examples, it can learn to generate text that resembles those examples, even if it hasn't seen a vast number of examples of that particular style. This makes it possible to train language models to perform specific tasks or generate text in a specific style, even with limited training data.

Let me give you an example. If you wanted to train a language model to generate fiction in the style of your favorite author, all you would need to do is provide a few examples of their writing as input. The model would then learn to generate text that resembles that author's unique writing style.

In short, few-shot prompting is a valuable tool for steering large language models to perform specific tasks or generate text in a particular style, even with very few examples. This technique is definitely worth exploring for anyone looking to expand their knowledge of language models and AI technology.

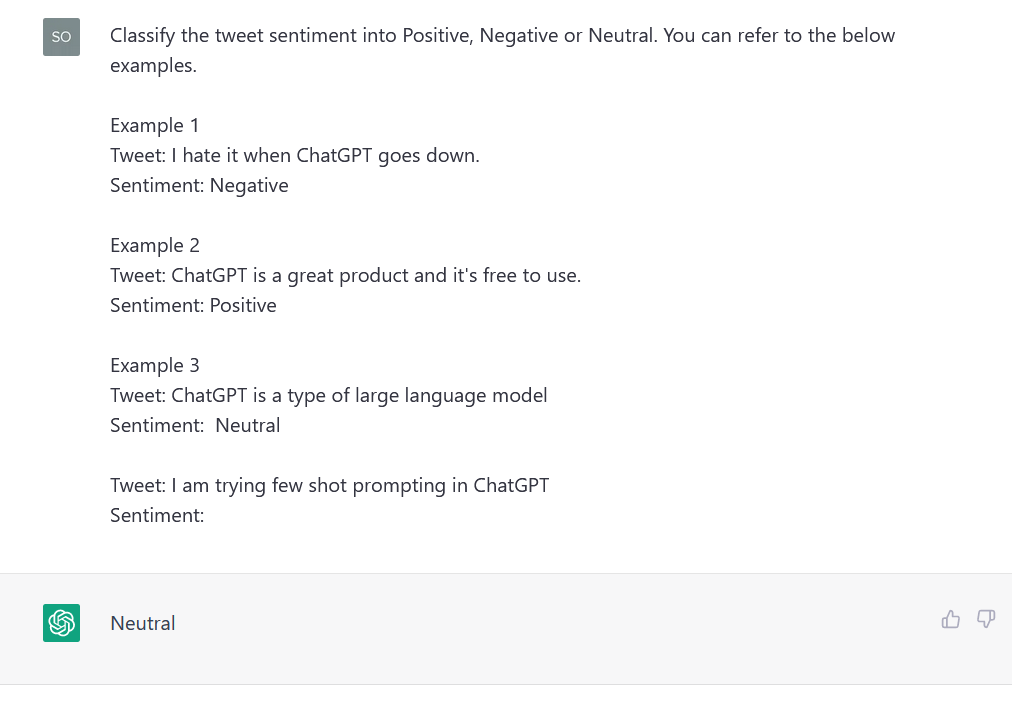

Even though I refer to it as a training process, it's not true training (at least for the next example). Instead, a few examples are included in the prompt itself, so that the language model can pick up the pattern and produce a similar response.

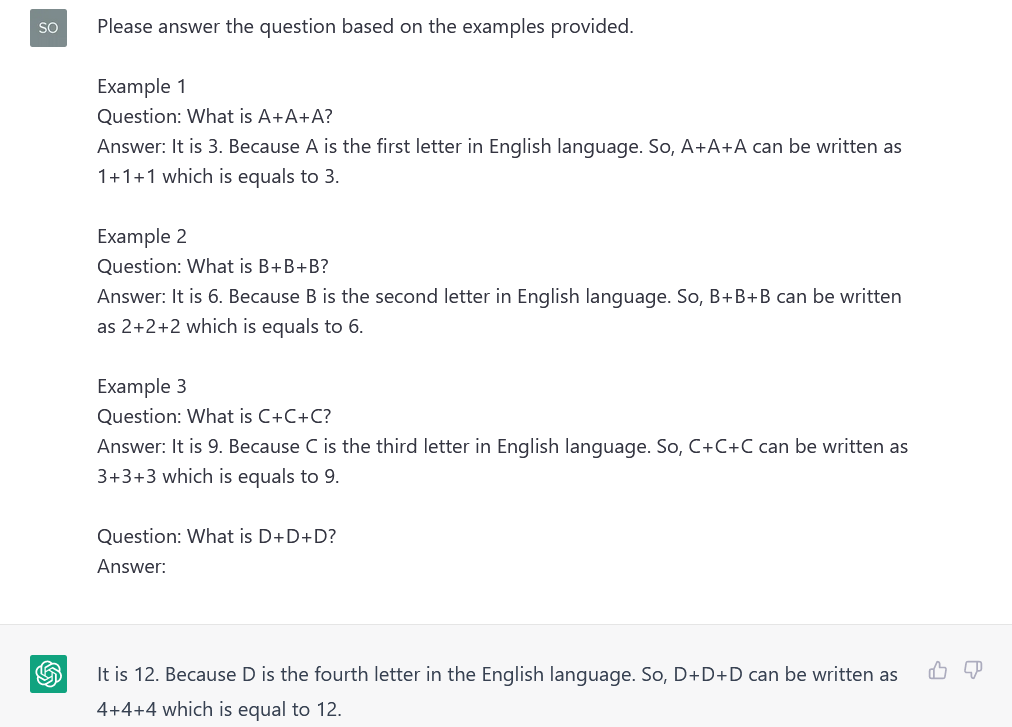

Here I crafted the prompt using three examples in it. If only one example is used, it's called one-shot prompting, and if there are no examples, it's referred to as zero-shot prompting.
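The difference between zero-shot, one-shot, and few-shot prompts comes down to how many worked examples precede the actual query. The sketch below builds all three from the same pieces. The `few_shot_prompt` helper, the sentiment labels, and the sample tweets are made up for illustration; they are not the ones used in the screenshot above.

```python
def few_shot_prompt(task, examples, query):
    """Build a prompt with zero or more labelled examples followed by the query."""
    lines = [task, ""]
    for text, label in examples:
        lines += [f"Tweet: {text}", f"Sentiment: {label}", ""]
    # The final entry is left unlabelled so the model completes it.
    lines += [f"Tweet: {query}", "Sentiment:"]
    return "\n".join(lines)


task = "Classify the sentiment of each tweet as Positive, Negative, or Neutral."
examples = [
    ("I love the new update, everything feels faster!", "Positive"),
    ("My order arrived broken and support never replied.", "Negative"),
    ("The store opens at 9 am tomorrow.", "Neutral"),
]
query = "Best concert I have been to in years."

zero_shot = few_shot_prompt(task, [], query)            # no examples
one_shot = few_shot_prompt(task, examples[:1], query)   # one example
few_shot = few_shot_prompt(task, examples, query)       # three examples
print(few_shot)
```

Ending the prompt with an open `Sentiment:` line mirrors the format of the examples, which nudges the model to answer with just a label.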

You may be wondering why the examples were necessary for the above scenario. ChatGPT has the ability to classify tweets without them. I was simply demonstrating how few-shot learning works. Now let's take a look at a reasoning question I'll ask ChatGPT without providing any examples. Observe how ChatGPT responds.

You may also be wondering what kind of question I asked, correct? Now let's clarify with some additional information (few-shot prompts) what I was actually trying to ask.

I believe you can now see how powerful few-shot prompting is. Don’t you agree?

Recursive Prompt Crafting

When creating prompts, it may be helpful to utilize ChatGPT as a tool. We may be familiar with the principles of creating effective prompts, but struggle to come up with the right words and phrases. Utilizing ChatGPT can provide us with a starting point, even if the prompts generated may not be of the highest quality. Through iteration and experimentation, we can refine the prompts until they meet our desired specifications. An example is included below.
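The iterate-and-refine loop can be sketched in code as well. This is a rough illustration under my own assumptions: `META_PROMPT`, `refine_prompt`, and `fake_model` are hypothetical names, and `fake_model` is a stand-in that only appends a marker so the loop runs without any API. In practice you would pass a function that sends the text to ChatGPT and returns its reply.

```python
from typing import Callable, List

# Hypothetical meta-prompt asking the model to improve a draft prompt.
META_PROMPT = (
    "You are helping me write a prompt for a language model.\n"
    "Goal: {goal}\n"
    "Current draft: {draft}\n"
    "Rewrite the draft so it is clearer and more specific. "
    "Return only the new prompt."
)


def refine_prompt(goal: str, ask_model: Callable[[str], str], rounds: int = 3) -> List[str]:
    """Ask the model to rewrite the draft `rounds` times; return every version."""
    draft = goal  # start from the plain goal as the first draft
    history = [draft]
    for _ in range(rounds):
        draft = ask_model(META_PROMPT.format(goal=goal, draft=draft)).strip()
        history.append(draft)
    return history


def fake_model(meta_prompt: str) -> str:
    """Stand-in for a real model call: echoes the current draft with a marker."""
    draft = meta_prompt.split("Current draft: ", 1)[1].split("\n", 1)[0]
    return draft + " (refined)"


history = refine_prompt("Summarize a news article in three bullet points.", fake_model, rounds=2)
for version in history:
    print(version)
```

Keeping the full history rather than only the last draft lets you compare versions and fall back to an earlier one if a later rewrite drifts away from the goal.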

Conclusion

In conclusion, prompt engineering is a crucial aspect of working with generative AI models, particularly large language models. By carefully crafting prompts, we are able to steer the output of these models in a desired direction and unlock their full potential. With the right prompts, we can generate high-quality, relevant, and diverse outputs that are useful in various real-world applications. This blog post provided an overview of the basics of prompts and how prompt engineering can be leveraged to enhance the handling of LLMs. The examples and illustrations used in this post showcase the power of prompt engineering and highlight the importance of considering it when building on generative AI models. It is worth noting that prompt design is an iterative and experimental process that can take a lot of time, depending on the use case.

Going forward, I aim to provide more advanced discussions on prompt engineering, prompt optimization, and prompt management, including comprehensive code examples and libraries. This area of research is ongoing and I will continue to stay informed and incorporate the latest developments into my future blog posts.

Thank you for reading this article. Consider subscribing to this post if you found its contents valuable and enlightening. Your support will be a source of inspiration for crafting future posts that are equally informative and engaging.